Sandcats.io: free dynamic DNS for Sandstorm users

By Asheesh Laroia - 18 May 2015

Sandstorm is open source server software that makes it easy to install web apps like Ethercalc or Let’s Chat. But that’s not much use if your server doesn’t have a name, and setting up DNS correctly for Sandstorm has until now been a complicated, fiddly process.

That’s why today I’m announcing sandcats.io, a free dynamic DNS service for Sandstorm users. It now takes 120 seconds to go from an empty Linux virtual machine to a working personal server, DNS and all.

The Sandstorm install script asks you what subdomain you want; if you type alice, then your server is online at alice.sandcats.io.

It’s as simple as that. Check out this 30-second ASCII screencast.

Solving DNS for Sandstorm users

I say it solves DNS for Sandstorm users because the sandcats.io service handles all the following complications:

- Domain provisioning. You type the name you want for your server while running the install script.

- Wildcard DNS. Sandstorm needs wildcard DNS because, with its security model, each session of each app runs on a unique unguessable subdomain. This protects against most cross-site request forgery attacks, for example. Sandcats.io provides wildcard DNS; this also means that any time a user runs a new app on your personal Sandstorm server, DNS is already configured.

- Automatic updates. Every 60 seconds, your Sandstorm server sends a small UDP message to the sandcats.io service. If your IP address has changed, the service replies with another short message. Upon receipt of that, your server sends an authenticated message, and sandcats.io updates its records. Our DNS records have a 60-second TTL, so if your IP address changes, the change will propagate within two minutes, and you never have to lift a finger.

- Domain recovery. If you lose your authentication keys, you can recover the domain by typing "help" into the Sandstorm installer.
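To make the update cycle concrete, here is a minimal in-process sketch in Python of the exchange described in the list above. It is not the real sandcats.io client or protocol: the class and function names are hypothetical, the network is replaced by direct method calls, and HMAC-SHA256 with a shared secret stands in for whatever authentication the real service uses. It also spells out the arithmetic behind "within two minutes".

```python
import hashlib
import hmac

PING_INTERVAL_SECONDS = 60  # from the post: the server pings every 60 seconds
DNS_TTL_SECONDS = 60        # from the post: records carry a 60-second TTL


def _tag(key: bytes, hostname: str, ip: str) -> bytes:
    # Assumption: HMAC-SHA256 stands in for "an authenticated message";
    # the real service's authentication scheme is not modeled here.
    return hmac.new(key, f"{hostname}:{ip}".encode(), hashlib.sha256).digest()


class FakeSandcatsService:
    """Hypothetical in-memory model of the dynamic DNS service."""

    def __init__(self) -> None:
        self.records: dict[str, str] = {}  # hostname -> current IP address
        self.keys: dict[str, bytes] = {}   # hostname -> client secret

    def register(self, hostname: str, ip: str, key: bytes) -> None:
        self.records[hostname] = ip
        self.keys[hostname] = key

    def handle_ping(self, hostname: str, source_ip: str) -> bool:
        # "Reply" (return True) only if the ping came from a new address.
        return self.records.get(hostname) != source_ip

    def handle_update(self, hostname: str, source_ip: str, tag: bytes) -> bool:
        expected = _tag(self.keys[hostname], hostname, source_ip)
        if not hmac.compare_digest(expected, tag):
            return False  # unauthenticated update: ignore it
        self.records[hostname] = source_ip
        return True


def client_tick(service, hostname: str, key: bytes, current_ip: str) -> bool:
    """One iteration of the 60-second loop; True if the DNS record changed."""
    if not service.handle_ping(hostname, current_ip):
        return False  # no reply: nothing changed
    return service.handle_update(hostname, current_ip, _tag(key, hostname, current_ip))


# Worst case: the address changes right after a ping (up to one interval to
# notice), then resolvers may keep serving the old record for one full TTL.
WORST_CASE_PROPAGATION_SECONDS = PING_INTERVAL_SECONDS + DNS_TTL_SECONDS
```

The two-step design means the common case (nothing changed) costs a single tiny ping, and the authenticated path only runs when the service has actually seen a new address.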

I’d love to hear your feedback; feel free to send me an email.

The code is open source, and you can read more in the technical documentation, but what I really recommend is that you try it out.

Switch to your own domain whenever you want

The Sandcats service is optional. If you already have a domain of your own, you can join the many other people actively running Sandstorm on their own domain.

If you know you want to go it alone, the install script allows you to opt out and configure DNS yourself.

Moreover, if you start out with a sandcats.io subdomain and decide you want a little more personality for your server, you can reconfigure it at any time. Look for your server’s sandstorm.conf file.

Install Sandstorm now to try it out

Sandstorm exists to let you run web apps like Etherpad, HackerSlides, Let’s Chat, and others as easily as you install apps on your phone. If that’s something you want on a server for you or your organization, I hope you install Sandstorm right now.

First San Francisco Meetup

By Asheesh Laroia - 14 May 2015

Last Thursday, May 7, I helped organize the first event of the new Sandstorm SF Bay Area meetup group. The event was a Project Night, modeled after the Boston Python Project Nights that Jessica McKellar, Ned Batchelder, and I helped start a few years ago.

Project nights are unstructured chances for Sandstorm developers & users to work together, mentor each other, connect socially, teach, learn, or do whatever else it is Sandstorm users & developers want to do together.

I started with a brief introduction to Sandstorm, to ensure new people had some context.

After that, we laptopped and chatted. Here are some highlights from the photo album.

Thanks to Jack Singleton and Thoughtworks for hosting the event, and to Ti Zhao for the wide panoramic shot.

If you’re near the San Francisco Bay, get notified of the next event and join the Meetup group!

Delegation is the Cornerstone of Civilization: Sharing in Sandstorm.io

By David Renshaw and Kenton Varda - 05 May 2015

We sometimes describe Sandstorm as: “Like Google Docs, except open source, you can run it on your own server, and you can extend it with apps written by anyone.”

Sandstorm is all about collaboration – we have apps for real-time collaborative document editing, chat rooms, even collaborative music streaming. Collaboration, of course, requires access control. Sometimes you want some people to be able to edit a document while others can only read. Up until now, Sandstorm relied on each app to implement its own access control model, and many simply did not. For example, with Etherpad on Sandstorm before now, you could only share full write access.

Today, we’re introducing Sandstorm’s new built-in sharing model. With it, apps can define a set of “permissions” (like “read” and “write”) which make sense for the app, and then Sandstorm itself will present a user interface by which the user can grant these permissions to other users. Since Sandstorm is designed to support fine-grained app containers (where, for example, each Etherpad document runs as a completely separate instance of the app in its own container), Sandstorm can implement this access control model at the container level, freeing the app of most of the work. The app need not track users itself; Sandstorm will tell it (e.g. through HTTP headers) exactly which permissions the current user has, so all the app needs to do is implement those.
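As a rough illustration of "all the app needs to do is implement those," here is a Python sketch of how an app might gate requests on the permissions Sandstorm reports. It assumes the permissions arrive as a comma-separated list in a header named X-Sandstorm-Permissions, and the mapping from HTTP methods to "read"/"write" is a hypothetical policy for a GitWeb-like app.

```python
# Hypothetical policy for a GitWeb-like app: the permission each method needs.
REQUIRED_PERMISSION = {
    "GET": "read",
    "HEAD": "read",
    "POST": "write",
    "PUT": "write",
    "DELETE": "write",
}


def granted_permissions(headers: dict[str, str]) -> set[str]:
    # Assumed header format: "read,write". Real HTTP header lookup should be
    # case-insensitive; a plain dict keeps the sketch short.
    raw = headers.get("X-Sandstorm-Permissions", "")
    return {p.strip() for p in raw.split(",") if p.strip()}


def is_allowed(method: str, headers: dict[str, str]) -> bool:
    needed = REQUIRED_PERMISSION.get(method.upper())
    if needed is None:
        return False  # unknown methods are denied by default
    return needed in granted_permissions(headers)
```

Notice that nothing here identifies the user; the app only asks which permissions the current request carries.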

Moreover, Sandstorm implements a radical capability-based sharing model, which emphasizes the importance of delegation. Under our model, if you have access to a document, you can share that access with others, without requiring the document owner to intervene. This is important, because any obstacle to delegation is an obstacle to getting work done. Marc Stiegler said it best:

The truth of the matter is: delegation cannot be prevented. The further truth of the matter is: we do not appreciate how fortunate it is that delegation cannot be prevented. Because in fact, delegation is the cornerstone of civilization. - Marc Stiegler

More on this in a bit, but first let’s look at how it works.

How it works

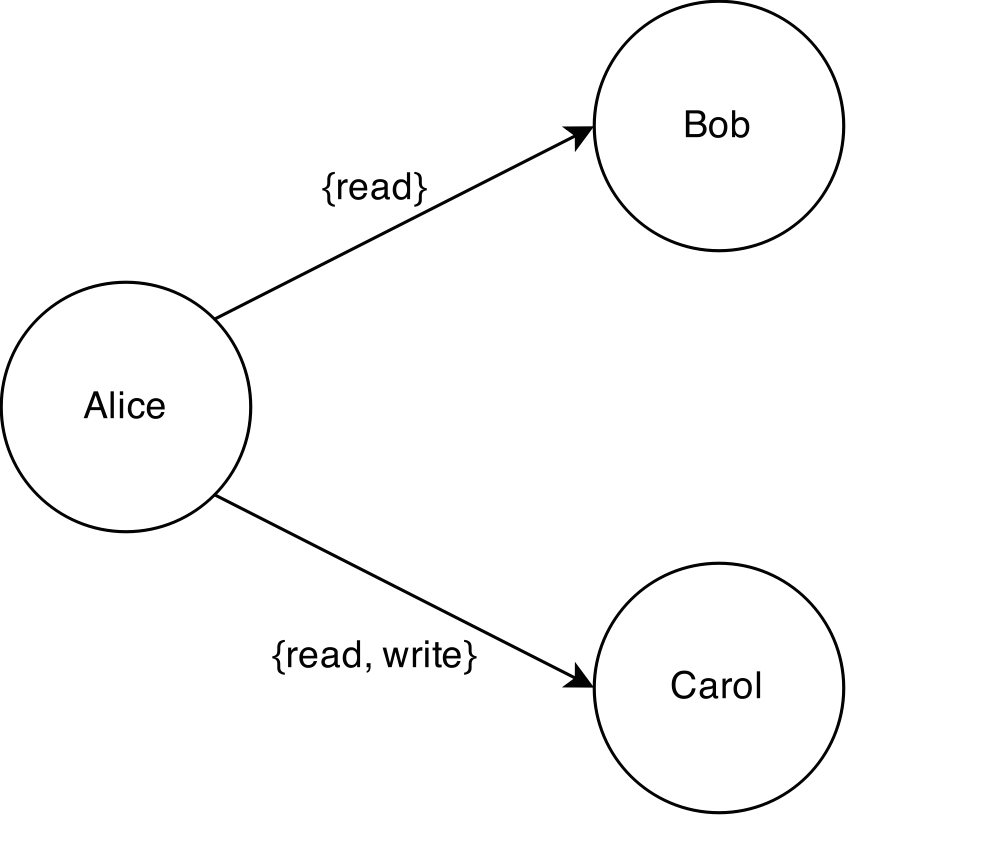

Consider the GitWeb app. With the new sharing features in place, after Alice creates a new repository she can, for example, share read-only access with Bob and share read/write access with Carol.

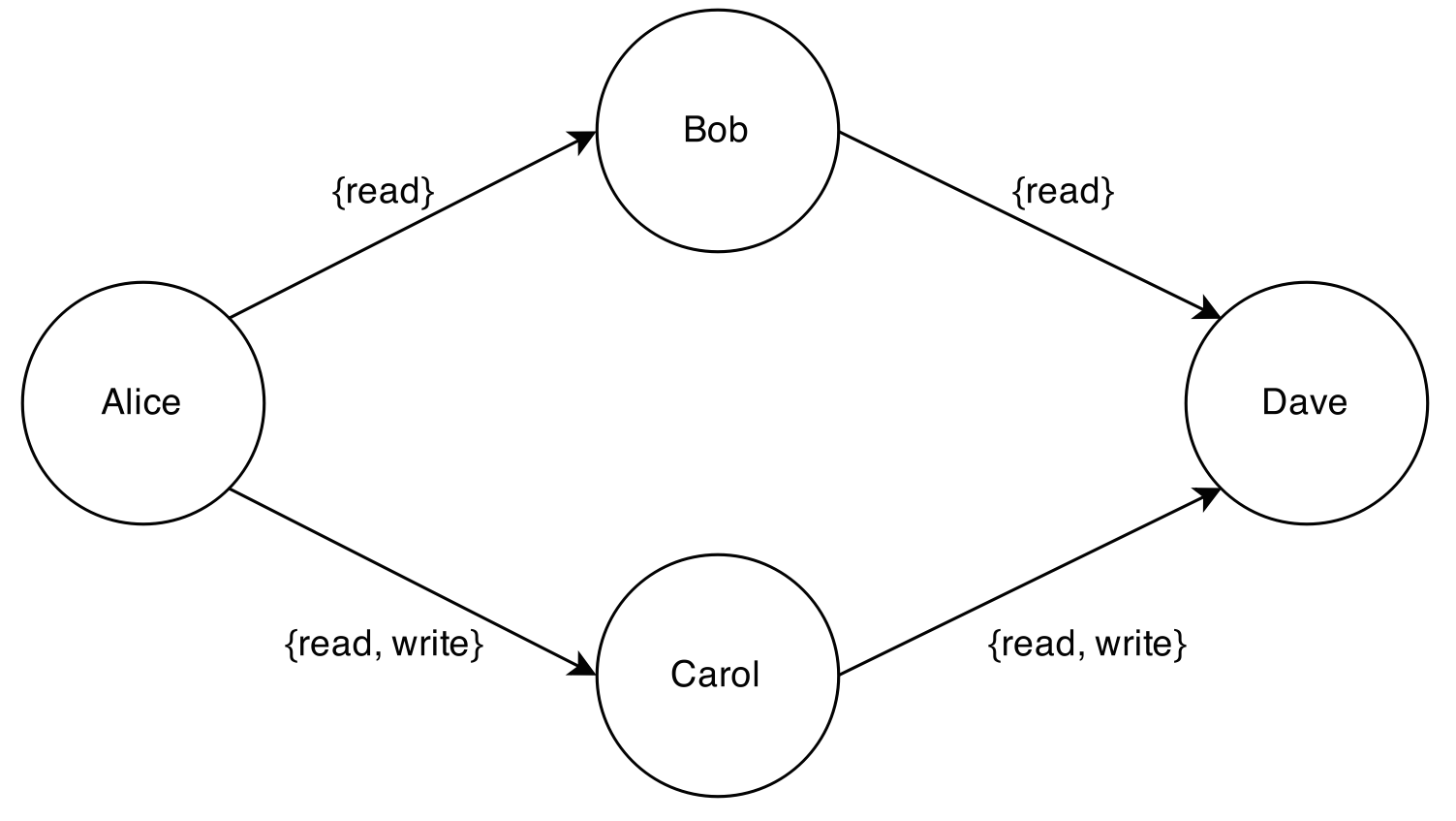

Then, in turn, both Bob and Carol can reshare the repo to other users. If they both share their full access to Dave, then Dave gets read/write access.

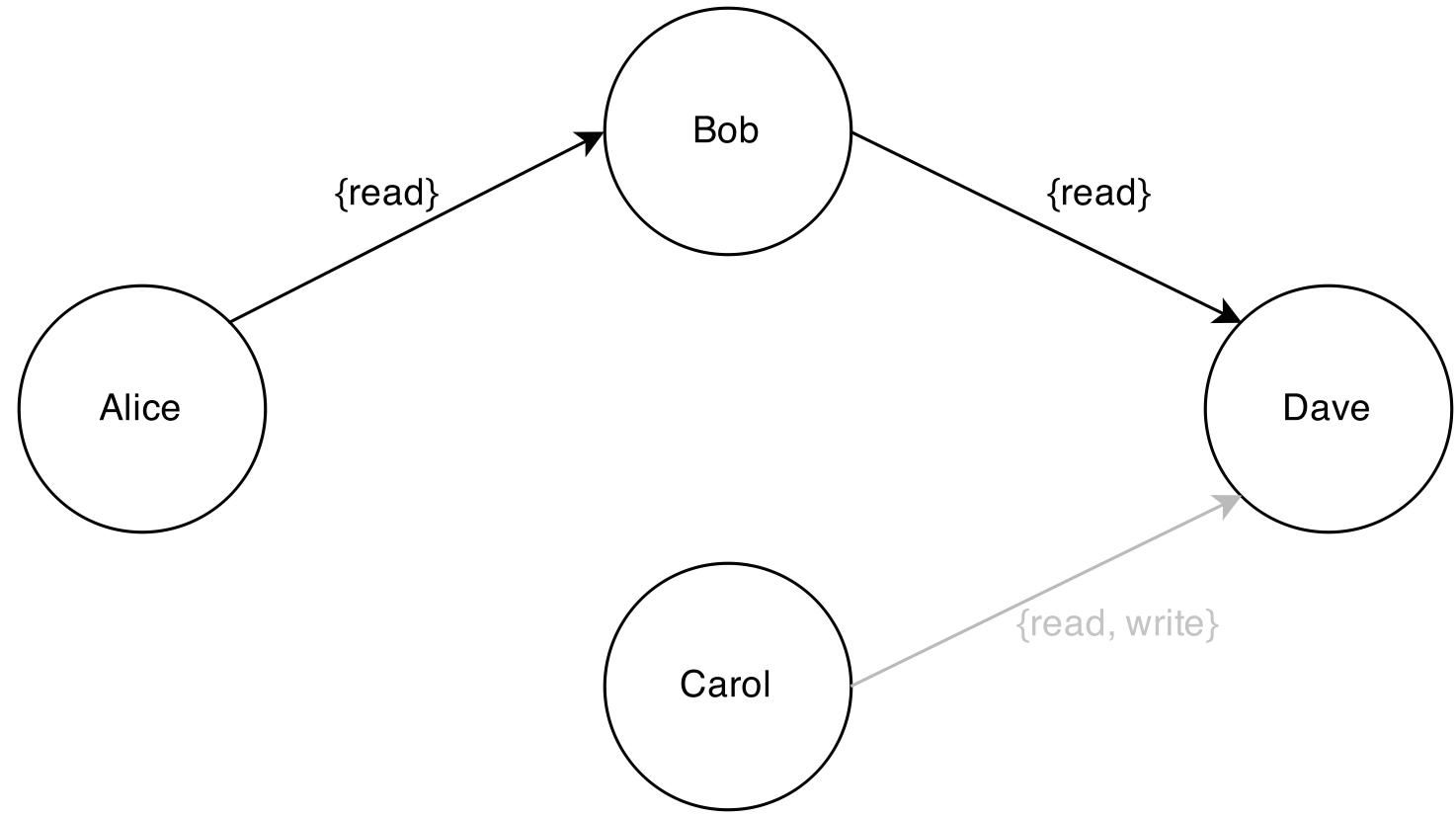

If Alice now unshares with Carol, then Carol loses all access and Dave loses write access. Dave continues to hold the read-only access that he received from Bob. Carol’s share to Dave continues to exist, but it no longer carries any permissions, as Carol herself no longer holds any permissions.
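One way to model the behavior just described is a graph in which each share carries the intersection of the permissions it names and the permissions its sharer currently holds. This Python sketch is a simplified model of that idea, not Sandstorm's actual implementation; effective_permissions and the example edges are hypothetical.

```python
from collections import defaultdict


def effective_permissions(owner, all_perms, shares):
    """Least fixed point over a sharing graph.

    shares: list of (sharer, recipient, perms) edges. A share conveys at most
    the permissions its sharer currently holds, so removing an upstream share
    automatically weakens everything downstream of it.
    """
    perms = defaultdict(frozenset)
    perms[owner] = frozenset(all_perms)
    changed = True
    while changed:  # iterate until stable; the graph may contain cycles
        changed = False
        for sharer, recipient, granted in shares:
            if recipient == owner:
                continue
            new = perms[recipient] | (frozenset(granted) & perms[sharer])
            if new != perms[recipient]:
                perms[recipient] = new
                changed = True
    return dict(perms)


ALL = {"read", "write"}
SHARES = [
    ("alice", "bob", {"read"}),
    ("alice", "carol", {"read", "write"}),
    ("bob", "dave", {"read"}),             # Bob shares his full (read) access
    ("carol", "dave", {"read", "write"}),  # Carol shares her full access
]
before = effective_permissions("alice", ALL, SHARES)
# Alice unshares with Carol; Carol's share to Dave stays in the graph.
after = effective_permissions(
    "alice", ALL, [s for s in SHARES if s[:2] != ("alice", "carol")]
)
```

Because permissions are recomputed from the owner outward, a cycle of shares cannot keep access alive on its own once its upstream connection is gone.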

Throughout this whole interaction, the app itself has no need to keep track of users! It only needs to know how to filter requests based on permissions. Teaching GitWeb to do this was easy, requiring only a few small changes in configuration files. The app remains dead simple. The only data that it stores is the Git repository that it hosts. There is no in-app user database.

Apps have complete control over the meanings of permissions. While “can read” and “can write” permissions will be sufficient in many cases, some apps, such as Groove Basin, might support a much wider range of permissions. In our updated Groove Basin package, sharing carries such diverse permissions as “can listen,” “can control the audio stream,” “can upload music,” and “can edit tags and delete music.”

Delegation: Why you want it

Traditional access control is accomplished using “access control lists” (ACLs), in which a document owner (Alice) maintains an ACL for the document specifying exactly who has access (Bob and Carol). In many systems, Bob and Carol cannot directly add a new person (Dave) to this ACL – they must ask Alice to do it. Usually, this is treated as a security “feature”.

Preventing delegation is not a feature. It provides no security benefit, and it creates obstacles to legitimate work.

For example, say Alice has asked Carol to update the document to add some data that Carol has collected, with a deadline of 6PM. Around 4PM, Carol realizes she won’t have time, so she asks her assistant, Dave, to do the work. Unfortunately, Dave doesn’t have access, and Alice is unavailable because she’s in a meeting. Now what?

Here’s what inevitably happens: Carol copies the whole document into a new document, and then shares that with Dave. Later, when Dave is done, Carol copies the contents of the new document back into the original, overwriting it.

Or, worse, Carol just gives Dave her password.

What just happened? Carol bypassed the access control that prevented delegation to Dave! In fact, she did so trivially; no “hacking” required. Indeed, no technology could prevent Carol from delegating access to Dave, short of cutting off all communication between Carol and Dave, which would be silly. So, our security “feature” actually provided no security benefit.

With that said, what Carol did was annoying and error-prone. It took time, and the document could have been damaged, especially if someone else were editing at the same time and had their changes overwritten. It would have been much better if Dave could have simply had direct access to the document.

So, that’s what Sandstorm allows.

Policies and Auditability

There is an objection to unrestricted delegation which has some merit: preventing delegation can help guard against accidental leaks. For example, I might share a sensitive document with a coworker for review, but I might not trust that my coworker really understands the sensitivity of the document. I trust my coworker not to violate my wishes, but my coworker might not understand that I don’t want them to reshare. I’d feel a lot more comfortable if I could simply prohibit resharing. Yes, they can always go out of their way to get around any barrier I put up, but hopefully it will be obvious to them that they shouldn’t.

This is a valid point, but solving it via the heavy-handed ACL model is overkill. As a user, what I really want is not a draconian security wall, but two things:

- A way to inform people of my wishes with regards to resharing.

- A way to hold them accountable if they break my wishes.

Sandstorm aims to provide both of these:

- You’ll be able to express “policies” when sharing, such as “please don’t reshare.” When people attempt to anyway, they will be informed that this is against your wishes, and perhaps blocked (at the UI level) from doing so. This is not strictly a security feature, but simply risk management: it helps nudge people in the right direction.

- Sandstorm tracks not just a list but a full sharing graph. This means it is always possible to tell not just who has access but how they got access. We will be developing visualizations and auditing tools so that when you discover someone has received access that they shouldn’t have, you can find out whom to blame. And as mentioned above, if you revoke that person’s access, then everyone they reshared to transitively loses access – unless you decide to restore those connections by sharing with those people directly.
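Because the whole graph is kept, answering "how did this person get access?" becomes a path search. The Python sketch below enumerates every chain of shares from the owner to a given user; access_paths and the example graph are hypothetical, and the edges omit permissions for brevity.

```python
def access_paths(owner, target, shares):
    """Return every simple chain of shares leading from owner to target."""
    out_edges = {}
    for sharer, recipient in shares:
        out_edges.setdefault(sharer, []).append(recipient)

    paths = []

    def walk(node, path):
        if node == target:
            paths.append(path)
            return
        for nxt in out_edges.get(node, []):
            if nxt not in path:  # skip cycles
                walk(nxt, path + [nxt])

    walk(owner, [owner])
    return paths


SHARES = [
    ("alice", "bob"),
    ("alice", "carol"),
    ("bob", "dave"),
    ("carol", "dave"),
    ("dave", "eve"),
]
chains = access_paths("alice", "eve", SHARES)
```

The second-to-last name on each chain is the person who shared directly with the user in question (here, Dave), which is where an audit would start.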

For enterprise users, we will further develop features that allow system administrators to set policies and audit access across an organization.

Many users may never need these features. That’s fine – the basic sharing interface works intuitively without requiring you to understand “sharing graphs” or “policies”. But for those who need it, especially in a business setting, these features will be invaluable.

Try it now!

Sharing is a new feature and we still have lots of work to do on it, but the initial version is already live. If you want to try sharing but have not yet set up your own server, you can use the demo. We’ve already enabled nontrivial permissions on GitWeb, Groove Basin, and Etherpad, and more app updates will be coming. Better yet, try writing an app that takes advantage of the new features. Enabling apps to easily support sharing is just one more way that Sandstorm makes open source web apps viable.

Introducing Drew and SF Meetup

By Drew Fisher - 04 May 2015

Hi! I’m Drew Fisher, and I’m thrilled to announce that I’m the newest sandcat on the block! I’ll be working on various parts of Sandstorm’s software platform, developer tools, and user experience.

A little bit about me:

- I’ve done triage for KDE, maintained Free Software reverse-engineered drivers for the Kinect depth camera and Microsoft Touch Mouse, and given a talk at the 28th Chaos Communication Congress.

- While at Texas A&M University, I led the Texas A&M University Collegiate Cyber Defense Competition team to nationals, ran a campus mirror of several Linux distribution archives, and was active in the local Linux Users Group.

- At UC Berkeley, I earned my MS in computer science studying security and human-computer interaction under Dave Wagner.

- I’ve built secure, private, and user-friendly collaboration tools at AeroFS.

- In my free time, I play and write puzzlehunts, fiddle with servers, bike, play board games, and enjoy eating delicious foods!

One of the reasons that I find Sandstorm so compelling is that I care deeply about security and privacy, but also about making delightful user experiences. These goals are frequently in conflict with each other, but Sandstorm is remarkable in that its design enables us to provide real security – isolation between orthogonal concerns, sandboxing, protections against whole classes of attacks – while also making that security useful and meaningful to users, by staying out of the way of user workflows, minimizing cognitive load, and capturing user intent instead of asking tons of questions.

Meetup on May 7

I’ll be at the first Sandstorm Meetup this Thursday, May 7, in San Francisco, so if you’re in the area, come visit the Thoughtworks office at 315 Montgomery from 6pm - 9pm! I look forward to meeting some new faces!

Is that ASCII or is it Protobuf? The importance of types in cryptographic signatures.

By Kenton Varda - 01 May 2015

So here’s a fun problem. Let’s say that a web site wants to implement authentication based on public-key cryptography. That is, to log into your account, you need to prove that you possess a particular public/private key pair associated with the account. You can use the same key pair for several such sites, because none of the sites ever see the private key and therefore cannot impersonate you to each other. Way better than passwords!

Background: Asymmetric Cryptography

Asymmetric encryption algorithms (ones with public/private key pairs) generally offer one or more of the following low-level operations:

- Encryption: Someone who has your public key can encrypt a message that can only be decrypted using your private key (i.e. by you). RSA implements this. Also, asymmetric encryption can be built on key agreement algorithms.

- Signing: Using your private key, you can “sign” a message, and other people can verify the signature using your public key. RSA, DSA, Ed25519, and others implement this.

- Key Agreement: Using your private key and someone else’s public key, you can derive a new unique key. The other person can derive the same key using their private key and your public key, but no one who doesn’t possess at least one of the two private keys can derive the key. Thus, you can use this key as a symmetric key to encrypt messages between you. This is called “Diffie-Hellman key exchange”, and algorithms include the original Diffie-Hellman algorithm and ECDH (e.g. Curve25519).
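The key agreement operation can be checked by hand with tiny numbers. This Python sketch uses the classic textbook parameters p = 23 and g = 5, which are far too small to be secure; real systems use large groups or elliptic curves such as Curve25519.

```python
# Toy Diffie-Hellman with textbook-sized numbers: insecure, illustration only.
P = 23  # public prime modulus
G = 5   # public generator


def public_key(private: int) -> int:
    return pow(G, private, P)


def shared_key(my_private: int, their_public: int) -> int:
    return pow(their_public, my_private, P)


alice_private, bob_private = 4, 3
alice_public = public_key(alice_private)  # 5**4 mod 23 = 4
bob_public = public_key(bob_private)      # 5**3 mod 23 = 10

# Each side combines its own private key with the other's public key.
k_alice = shared_key(alice_private, bob_public)  # 10**4 mod 23 = 18
k_bob = shared_key(bob_private, alice_public)    # 4**3 mod 23 = 18
assert k_alice == k_bob
```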

Asymmetric Key Authentication

Let’s say your keys are RSA (probably the most common case today). To log in, you need to prove to the site that you possess your private key. How might we use the available operations (encrypt and sign) to do this? Keeping in mind that someone snooping on a previous login must not be able to simply replay those past messages to log in again, we might propose:

- Encrypt: The web site generates a random unguessable value, encrypts it with your public key, then sends the encrypted message to you. You decrypt the value and send it back, proving to the site that you possess the private key.

- Sign: The web site generates a random unguessable value and sends it to you. You sign the value with your private key and send it back. The site can verify this signature with your public key.
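Here is the naive "Sign" approach as a Python sketch, using textbook RSA with tiny primes (p = 61, q = 53) so that it runs with the standard library alone. Raw RSA without hashing or padding is insecure and is used here only to make the message flow visible; the thing to notice is that the user signs whatever number the site sends.

```python
import secrets

# Textbook RSA with tiny primes (p = 61, q = 53): insecure, illustration only.
N = 61 * 53                           # public modulus, 3233
E = 17                                # public exponent
D = pow(E, -1, (61 - 1) * (53 - 1))   # private exponent (Python 3.8+)


def sign(message: int) -> int:
    # Raw RSA signature: no hashing or padding, so NOT safe for real use.
    return pow(message, D, N)


def verify(message: int, signature: int) -> bool:
    return pow(signature, E, N) == message


# The site picks a random challenge...
challenge = secrets.randbelow(N - 2) + 2
# ...the user signs it without looking at it...
response = sign(challenge)
# ...and the site checks the response with the user's public key.
assert verify(challenge, response)
```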

Both basic approaches are commonly used for authentication. SSH, for example, used the encryption approach in v1, then switched to the signature approach in v2 in order to accommodate non-RSA key types. But the protocols used in practice are more complicated than described above, because…

The Obvious Problem

There is a huge problem with both approaches! In both cases, the web site controls the “random value” that is signed or the message to be decrypted. You’ve effectively given the web site complete access to your key:

- Encrypt: Instead of encrypting a newly-generated value, the site could send a message created by someone else that was encrypted for you. If you decrypt it and send it back without looking, the site gets access to this encrypted content which it wasn’t supposed to be able to read.

- Sign: Instead of a random message, the site could send you a specially-crafted message that has meaning to third parties. For example, the site could send you the digital equivalent of a bank check. If you sign it without looking, the site could go ahead and extract money from your account, or other bad things.

(Of course, both of these problems can trivially be exploited to allow an evil web site operator to secretly log into some other web site as you, by relaying the login challenge from that other site.)
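The relay attack in the parenthetical above needs no cryptographic cleverness at all. In this Python sketch (same toy RSA parameters; HonestSite, user_sign, and evil_site_login are hypothetical names), the evil site simply forwards another site's challenge and hands the victim's signature back to it.

```python
import secrets

# Toy textbook RSA, insecure: p = 61, q = 53.
N, E = 61 * 53, 17
D = pow(E, -1, (61 - 1) * (53 - 1))


def user_sign(challenge: int) -> int:
    # The naive client: signs any challenge a site presents, without looking.
    return pow(challenge, D, N)


class HonestSite:
    """Hypothetical site that logs users in via the naive signature challenge."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.pending = None

    def issue_challenge(self) -> int:
        self.pending = secrets.randbelow(N - 2) + 2
        return self.pending

    def login(self, signature: int) -> bool:
        return pow(signature, E, N) == self.pending


def evil_site_login(victim_sign, target: HonestSite) -> bool:
    """The evil site forwards target's challenge as if it were its own."""
    relayed = target.issue_challenge()
    signature = victim_sign(relayed)  # victim believes it is logging into the evil site
    return target.login(signature)    # evil site is now logged in as the victim
```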

So what do we do about this?

Be more careful?

Let’s focus on the signature case. Naively, you might say: We need to restrict the format of the message the site presents for signature, so that it cannot be misinterpreted to mean anything else. The message will need a random component, of course, in order to prevent replays, but we can delimit that randomness in ASCII text which states the purpose. (In particular, it’s important that the text specify exactly what web site we’re trying to log into.) So, now we have:

z.example.com login challenge:1Z5ns8ectRGTMNYz3NHdB 699a674d30fc3bd42ec3619cfab13deb

Here is a message that could not reasonably be interpreted as anything except “I want to log in to z.example.com”, right? It’s safe to sign, right?

The Subtle Problem

I’m sorry to say, but there is still a problem. It turns out that message isn’t the ASCII text it appears to be:

$ echo -n 'z.example.com login challenge:1Z5ns8ectRGTMNYz3NHdB 699a674d30fc3bd42ec3619cfab13deb' \
    | protoc --decode=Check check.proto
toAccount: "699a674d30fc3bd42ec3619cfab13deb"
amount: 100
memo: "example.com login challenge:1Z5ns8ectRGTMNYz3N"

Oops, it appears you just signed a bank check after all – and you were trying so hard not to! The check was encoded in Google’s binary encoding format Protocol Buffers – we were able to decode it using the protoc tool. Here is the Protobuf schema (the file check.proto that we passed to protoc):

syntax = "proto2";
message Check {
  required string toAccount = 8;  // 32-byte hex account number
  required uint32 amount = 9;     // Dollar amount to pay.
  optional string memo = 15;      // Just a comment, not important.
}

Thanks for your $100. Hope you have fun on z.example.com.

The trick here is that, using my knowledge of Protobuf encoding, I was able to carefully craft an ASCII message that happened to be a valid Protobuf message. I chose Protobufs because of my familiarity with them, but many binary encodings could have worked here. In particular, most binary formats include ways to insert bytes which will be ignored, giving us an opening to insert some human-readable ASCII text. Here I pulled the text into the memo field, but had I not defined that field, Protobuf would have ignored the text altogether with no error.
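To see why those bytes parse, here is a minimal hand-written Python decoder for the subset of the Protobuf wire format this message uses (varint and length-delimited fields). It is a sketch for illustration, not the official protobuf library, and it returns raw fields keyed by field number, so it would happily report field 15 even without a schema naming it.

```python
def read_varint(data: bytes, pos: int) -> tuple[int, int]:
    """Decode a base-128 varint starting at pos; return (value, new_pos)."""
    result, shift = 0, 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: last byte of this varint
            return result, pos
        shift += 7


def decode_fields(data: bytes) -> dict[int, object]:
    """Map field number -> value (later duplicates overwrite earlier ones)."""
    fields = {}
    pos = 0
    while pos < len(data):
        key, pos = read_varint(data, pos)
        field_number, wire_type = key >> 3, key & 0x7
        if wire_type == 0:    # varint
            fields[field_number], pos = read_varint(data, pos)
        elif wire_type == 2:  # length-delimited (strings, bytes, sub-messages)
            length, pos = read_varint(data, pos)
            fields[field_number] = data[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")
    return fields


MESSAGE = b"z.example.com login challenge:1Z5ns8ectRGTMNYz3NHdB 699a674d30fc3bd42ec3619cfab13deb"
# 'z' = 0x7A -> field 15, wire type 2; '.' = 46 -> length of memo
# 'H' = 0x48 -> field 9, wire type 0;  'd' = 100 -> amount
# 'B' = 0x42 -> field 8, wire type 2;  ' ' = 32 -> length of toAccount
decoded = decode_fields(MESSAGE)
```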

Is this attack realistic? If the person designing the Check protocol intended to make the attack possible, then absolutely! No one would ever notice that the field numbers had been carefully chosen to encode nicely as ASCII, especially if plausible-looking optional fields were defined to fill in the gaps between them. (Of course, a real attacker would have omitted the memo field.)
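Going the other direction shows how the field numbers were chosen. A one-byte Protobuf key is (field_number << 3) | wire_type, so field 15 with wire type 2 is 'z', field 9 with wire type 0 is 'H', and field 8 with wire type 2 is 'B', all printable ASCII. This Python sketch (craft_check and its helpers are hypothetical) rebuilds the "login challenge" from its three fields.

```python
def one_byte(value: int) -> int:
    # Values under 128 encode as a single varint byte; larger ones would not.
    if not 0 <= value < 0x80:
        raise ValueError("needs a multi-byte varint; not handled in this sketch")
    return value


def key_byte(field_number: int, wire_type: int) -> int:
    return one_byte((field_number << 3) | wire_type)


def craft_check(memo: bytes, amount: int, to_account: bytes) -> bytes:
    return (
        bytes([key_byte(15, 2), one_byte(len(memo))]) + memo                # 'z', '.'
        + bytes([key_byte(9, 0), one_byte(amount)])                          # 'H', 'd'
        + bytes([key_byte(8, 2), one_byte(len(to_account))]) + to_account    # 'B', ' '
    )


crafted = craft_check(
    b"example.com login challenge:1Z5ns8ectRGTMNYz3N",
    100,
    b"699a674d30fc3bd42ec3619cfab13deb",
)
print(crafted.decode("ascii"))
```

The memo length (46) and the account length (32) happen to be the characters '.' and ' ', which is why the result reads like an innocent hostname followed by a space-separated token.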

Even if the other format were not designed maliciously, it’s entirely possible for two protocols to assign different meanings to the same data by accident – such as an IRC server accepting input from an HTTP client. This kind of problem is known as inter-protocol exploitation. We’ve simply extended it to signatures.

Now what?

Here’s the thing: When you create a key pair for signing, you need to decide exactly what format of data you plan to sign with it. Then, don’t allow signatures made with that key to be accepted for any other purpose.

So, if you have a key used for logging into web sites, it can only be used for logging into web sites – and all those web sites must use exactly the same format for authentication challenges.

Note that the key “format” can very well be “natural language messages encoded in UTF-8 to be read by humans”, and then you can use the key to sign or encrypt email and such. But, then the key cannot be used for any automated purpose (like login) unless the protocol specifies an unambiguous natural-language “envelope” for its payload. Most such protocols (such as SSH authentication) do no such thing. (This is why, even though SSH and PGP both typically use RSA, you should NOT convert your PGP key into an SSH key nor vice versa.)

Another valid way to allow one key to be used for multiple purposes is to make sure all signed messages begin with a “context string”, for example as recommended by Adam Langley for TLS. However, in this case, all applications which use the key for signing must include such a context string, and context strings must be chosen to avoid conflicts.
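A minimal Python sketch of the context-string idea follows. The context names are hypothetical, and HMAC-SHA256 stands in for a real public-key signature only so the example runs with the standard library; what matters is that the bytes actually signed always begin with a context that the verifier insists on.

```python
import hashlib
import hmac


def signing_input(context: bytes, message: bytes) -> bytes:
    """Prefix a message with a purpose-identifying context string.

    Since contexts may not contain NUL, the first NUL always marks where the
    context ends, so distinct (context, message) pairs never collide.
    """
    if b"\x00" in context:
        raise ValueError("context strings must not contain NUL")
    return context + b"\x00" + message


# Stand-in for a signing key; a real system would use a public-key signature.
KEY = b"demo key, not a real signing key"


def sign(context: bytes, message: bytes) -> bytes:
    return hmac.new(KEY, signing_input(context, message), hashlib.sha256).digest()


def verify(context: bytes, message: bytes, tag: bytes) -> bool:
    return hmac.compare_digest(sign(context, message), tag)


challenge = b"z.example.com 1Z5ns8ectRGTMNYz3N"
tag = sign(b"example-login-v1", challenge)
```

As the post notes, this only protects you if every application that uses the key follows the same convention.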

But probably better than either of the above is to use a different key for each purpose. So, your PGP master key should not be used for anything except signing subkeys. Unfortunately, PGP subkeys do not allow you to express much about the purpose of subkeys – you can only specify “encryption”, “signing”, “certification”, or “authentication”. This does not seem good enough – e.g. an “authentication” key still cannot safely be used for SSH login because a malicious SSH server could still trick you into signing an SSH login request that happens to be a valid authentication request for a completely different service in a different format. (Admittedly, this possibility seems unlikely, but tricking an HTTP client into talking to a server expecting a completely different protocol also seems unlikely, yet it works e.g. with IRC. We can’t really prove that no other protocol is designed exactly wrong such that it interprets SSH’s authentication signatures as something else.) Alas, in practice, SSH is exactly what the “authentication” subkey type is used for! So, perhaps SSH should be considered the de facto standard format for PGP’s “authentication” keys, and if you implement an authentication system that accepts PGP keys designated for authentication, you should be sure to exactly match SSH’s authentication signature format.

Similarly, X.509 certificates have the “extended key usage” extension to specify a key’s designated purpose, but the options here are similarly limited.

Another line of thought says simply: “Don’t use signatures for anything except signing certificates.” Indeed, signing email is arguably a bad idea: it means that not only can the recipient verify that it came from you, but the recipient can prove to other people that you wrote the message, which is perhaps not what you really wanted. Similarly, when you log into a server, you probably don’t want that server to be able to prove to the rest of the world that you logged in (as SSH servers currently can do!). What you probably really wanted is a zero-knowledge authentication system where only the recipient can know for sure that the message came from you (which can be built on, for example, key agreement protocols).
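One way to get the property described above, sketched in Python under heavy simplification: both parties derive a shared key by Diffie-Hellman, and the user answers the challenge with a MAC under that key. The toy group (p = 23, g = 5) is insecure, the helper names are hypothetical, and this is not a description of what Sandstorm will build; it only shows why such a proof convinces the server but cannot be shown to anyone else.

```python
import hashlib
import hmac
import secrets

# Toy Diffie-Hellman group: insecure, illustration only.
P, G = 23, 5


def keypair() -> tuple[int, int]:
    private = secrets.randbelow(P - 3) + 2  # private exponent in [2, P-2]
    return private, pow(G, private, P)


def mac_key(my_private: int, their_public: int) -> bytes:
    shared = pow(their_public, my_private, P)
    return hashlib.sha256(str(shared).encode()).digest()


user_priv, user_pub = keypair()
server_priv, server_pub = keypair()

# Server sends a fresh challenge; user answers with a MAC under the shared key.
challenge = secrets.token_bytes(16)
proof = hmac.new(mac_key(user_priv, server_pub), challenge, hashlib.sha256).digest()

# Server checks the proof using its own private key and the user's public key.
expected = hmac.new(mac_key(server_priv, user_pub), challenge, hashlib.sha256).digest()
assert hmac.compare_digest(proof, expected)

# The server could have computed the identical value on its own, so the proof
# is worthless as evidence to a third party.
forged = hmac.new(mac_key(server_priv, user_pub), challenge, hashlib.sha256).digest()
assert forged == proof
```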

In any case, when Sandstorm implements authentication based on public keys, it will probably not be based on signatures. But, we still have more research to do here.

Thanks to Ryan Sleevi, Tony Arcieri, and Glenn Willen for providing pointers and insights that went into writing this article. However, any misunderstandings of cryptography are strictly my own.